Bring up bootstrap node and master node

- Ensure that the customer IP is reachable from the data switch.

- Ensure that the BIOS settings are applied on all the servers.

- Ensure that all the required artifacts are available in the /var/nps/ISO folder of the NPS toolkit VM. For more information about the artifacts, see Download RHOCP and HPE Nimble CSI driver artifacts.

- Ensure that the proxy is not set in the environment variables. To disable the proxy, run the commands:

      unset http_proxy
      unset https_proxy
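The artifact and proxy prerequisites above can be checked with a short script before starting. The following is a minimal sketch, not part of the NPS toolkit: the function names and the `ISO_DIR` override are hypothetical, and it only verifies that the folder is non-empty, not that every required artifact is present.

```shell
#!/usr/bin/env bash
# Pre-flight sketch: confirm the proxy variables are unset and the
# artifact folder is populated. Function names are illustrative only.

# Folder named in the prerequisites; ISO_DIR is a hypothetical override.
ISO_DIR="${ISO_DIR:-/var/nps/ISO}"

# Returns 0 when neither proxy variable is set, 1 otherwise.
proxy_is_unset() {
    [ -z "${http_proxy+x}" ] && [ -z "${https_proxy+x}" ]
}

# Returns 0 when the given folder exists and contains at least one entry.
artifacts_present() {
    [ -d "$1" ] && [ -n "$(ls -A "$1" 2>/dev/null)" ]
}

# Runs both checks, prints a hint for each failure, returns 1 if any failed.
preflight() {
    local rc=0
    if ! proxy_is_unset; then
        echo "proxy variables are set; run: unset http_proxy; unset https_proxy"
        rc=1
    fi
    if ! artifacts_present "$ISO_DIR"; then
        echo "no artifacts found in $ISO_DIR"
        rc=1
    fi
    return "$rc"
}
```

After sourcing the script, `preflight && echo "prerequisites look OK"` reports whether it is reasonable to continue.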

- To bring up the "image-service" pod for the installation of RHCOS on the bootstrap and master nodes, run the following command from the NPS toolkit VM:

      nps baremetal -a install -nos <nos_type> -l debug

  Where nos is the Network Operating System, and the supported values for <nos_type> are cumulus and aruba.

  NOTE: The installation takes a few minutes to complete. For any installation issue, check the log file /var/nps/logs/<topology_name>/baremetal_install.log and take the necessary steps.
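Because only two values of <nos_type> are supported, it can help to validate the value before invoking the command. The sketch below is an illustration only: the helper names are hypothetical, and the script prints the command line for review rather than running `nps` itself.

```shell
#!/usr/bin/env bash
# Sketch: validate <nos_type> and print the install command for review.
# Helper names are hypothetical; the nps flags are copied from the step above.

# Returns 0 for the supported values (cumulus, aruba), 1 otherwise.
is_supported_nos() {
    case "$1" in
        cumulus|aruba) return 0 ;;
        *) return 1 ;;
    esac
}

# Prints the install command line, or an error on stderr for a bad value.
install_command() {
    local nos="$1"
    if ! is_supported_nos "$nos"; then
        echo "unsupported nos_type: '$nos' (expected cumulus or aruba)" >&2
        return 1
    fi
    echo "nps baremetal -a install -nos $nos -l debug"
}
```

For example, `install_command aruba` prints the command to run, while `install_command foo` fails with an error message and a non-zero exit status.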

- After the image-service pod is up and running, power on the bootstrap node to start the installation. To power on the bootstrap node with a one-time PXE boot, run the following command:

      nps baremetal -a temp-pxeboot -node bootstrap -l debug

  The bootstrap node is powered on and the installation starts.

  NOTE: For any RHCOS (operating system) installation issue on the bootstrap node, see Installing image service on RHCOS nodes is not complete.

-

Verify bootstrap of master cluster using the following substeps

After the bootstrap machine is successfully booted, login to bootstrap node from NPSVM as core user.

- Run the following command to verify bootstrap verification:

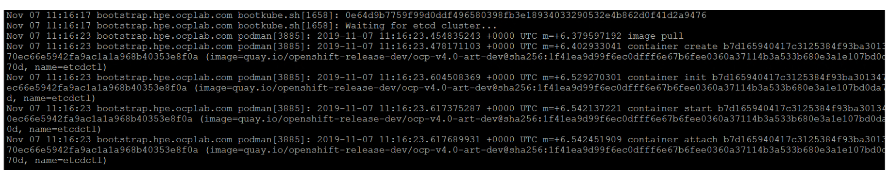

journalctl -b -f -u bootkube.service - Wait till the following message is displayed.

Waiting for etcd cluster…
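Watching the journal by eye can be replaced with a small filter that stops at the expected message. The following is a minimal sketch under that assumption: `wait_for_message` is a hypothetical helper, not a journalctl or NPS feature. It echoes each log line and returns as soon as one contains the given text.

```shell
#!/usr/bin/env bash
# Sketch: follow log lines on stdin until one contains the expected text.
# wait_for_message is a hypothetical helper name.

# Usage: <log producer> | wait_for_message "text to wait for"
# Returns 0 when a matching line is seen, 1 if the input ends first.
wait_for_message() {
    local pattern="$1" line
    while IFS= read -r line; do
        printf '%s\n' "$line"
        case "$line" in
            *"$pattern"*) return 0 ;;   # quoted, so matched literally
        esac
    done
    return 1
}

# On the bootstrap node, as the core user:
#   journalctl -b -f -u bootkube.service | wait_for_message "Waiting for etcd cluster"
```

The match is a plain substring test, so the trailing ellipsis in the message does not need to be typed. Note that `journalctl -f` keeps running until it next tries to write to the closed pipe, so the command may not return immediately after the match.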

- After the bootstrap node is up and running, power on the master node to install and configure the master cluster. To power on the master node with a one-time PXE boot, run the following command:

      nps baremetal -a temp-pxeboot -node master -l debug

  The master node is powered on and the installation starts.

  NOTE: For any RHCOS (operating system) installation issue on the master node, see Installing image service on RHCOS nodes is not complete.

- Open a new session on the NPS toolkit VM and check the master node status as follows:

  - SSH to the bootstrap VM from the bastion VM (NPS toolkit VM) as the core user.

  - Run the following command:

        journalctl -b -f -u bootkube.service

  - Search the output for the string 'bootkube.service complete'.

- To verify the master node cluster status, log in to the NPS toolkit VM and run the following commands:

      export KUBECONFIG=/var/nps/ISO/ign_config/auth/kubeconfig
      openshift-install wait-for bootstrap-complete --log-level=info

  When the master node cluster installation completes, the following message is displayed:

      INFO It is now safe to remove the bootstrap resources.
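When this check is scripted, the exit status of the installer can be used instead of reading the log output. The sketch below assumes that `openshift-install wait-for bootstrap-complete` exits with a non-zero status on failure or timeout; the `INSTALLER` variable and the `bootstrap_complete` function are hypothetical names introduced here so the logic can be dry-run with a stand-in command.

```shell
#!/usr/bin/env bash
# Sketch: act on the exit status of the bootstrap-complete check.
# INSTALLER and bootstrap_complete are hypothetical names.

# Kubeconfig path copied from the step above.
export KUBECONFIG=/var/nps/ISO/ign_config/auth/kubeconfig

# Command to invoke; override INSTALLER to dry-run the logic.
INSTALLER="${INSTALLER:-openshift-install}"

# Returns 0 when the installer reports that bootstrapping finished.
bootstrap_complete() {
    if "$INSTALLER" wait-for bootstrap-complete --log-level=info; then
        echo "bootstrap complete"
    else
        echo "bootstrap did not complete; check the bootkube.service journal" >&2
        return 1
    fi
}
```

Calling `bootstrap_complete` from the NPS toolkit VM then gives a single pass or fail result that later steps can depend on.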

- To verify and update the status of the node, run the following command:

      nps show --service baremetal

- After the master cluster is installed successfully, run the following command to delete the image-service pod:

      nps baremetal -a delete -nos <nos_type>

  Where nos is the Network Operating System, and the supported values for <nos_type> are cumulus and aruba.

- Remove the bootstrap entry from both load balancers and add a worker node entry to the workers list, because the bootstrap node is reconfigured as a worker node.

- Update the bootstrap entry in DNS to a worker node entry (change the bootstrap hostname to the worker node hostname), because the bootstrap node is reconfigured as a worker node.