Starting up rack, switches, and VIM nodes

- Manually connect the rack power cable to the power supply.

- Manually power on the HPE FlexFabric 5900 switch and HPE StoreFabric SN2100M switches.

Powering up the Undercloud host and VM

- Log in to the iLO console of the Undercloud host and power it on.

- After the Undercloud host is up, verify that the Undercloud VM has auto-started and is in the "running" state by running the following command from the Undercloud host:

virsh list --all

- If the Undercloud VM is not running, start it with the following command:

virsh start undercloud

- To reverify that the Undercloud VM is in the "running" state, run the following command:

virsh list --all

- To verify that the NTP service is running on the Undercloud host, run the following command:

systemctl status ntpd.service

NOTE: The status must be displayed as "active (running)".

- If the NTP service is not running on the Undercloud host, run the following command to start the service, and then reverify the status:

systemctl restart ntpd.service

- To check the synchronization status of the NTP server, run the following command:

ntpstat

NOTE: Ensure that the synchronization status is displayed as "synchronized". If it is not synchronized, restart the ntpd service, wait for some time, and check the synchronization again using the ntpstat command.

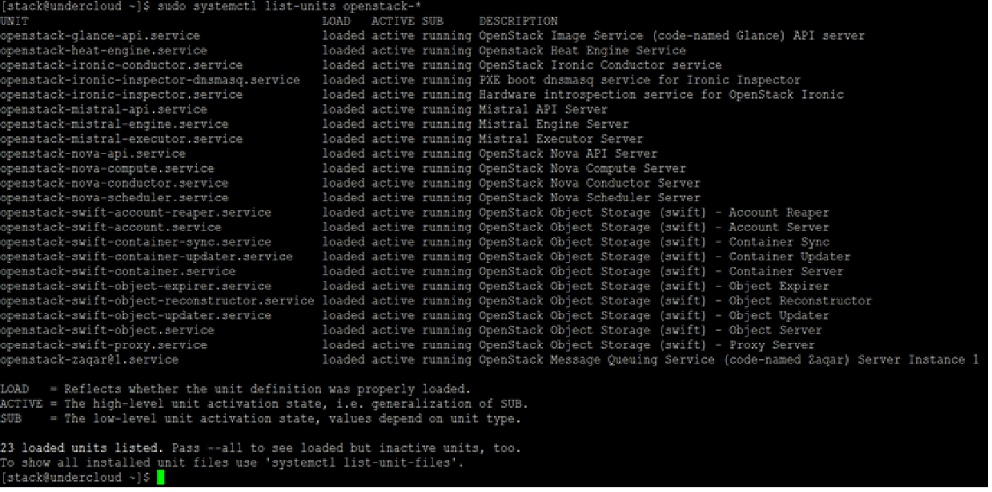
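The Undercloud VM check performed from the Undercloud host can be sketched as a small shell helper. This is only an illustration: the function names are hypothetical, and the default domain name `undercloud` is taken from the `virsh start undercloud` step, so adjust it if your VM is named differently.

```shell
# Sketch: make sure a libvirt domain is running, starting it if needed.
# Function names are illustrative; the default domain name "undercloud"
# is an assumption based on the `virsh start undercloud` step.

vm_is_running() {
    # --state-running limits the list to running domains; --name prints names only.
    virsh list --state-running --name | grep -qxF -- "$1"
}

ensure_vm_running() {
    vm="${1:-undercloud}"
    if vm_is_running "$vm"; then
        echo "$vm is already running"
        return 0
    fi
    echo "$vm is not running; starting it"
    virsh start "$vm" || return 1
    # Re-verify, as the procedure does with a second `virsh list --all`.
    vm_is_running "$vm"
}
```

Calling `ensure_vm_running` with no argument checks the `undercloud` domain and returns a non-zero status if the VM still is not running after the start attempt.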

- To verify the status of the Undercloud services running in the Undercloud VM, do the following:

- Log in to the Undercloud VM with username "stack".

- To verify that all the services are running, run the following command:

sudo systemctl list-units openstack-*

- To verify that the NTP service is running in the Undercloud VM and is synchronized with the referenced NTP server, do the following:

- To check the status of the NTP service, run the following command:

sudo systemctl status ntpd.service

- If the NTP service is not running, start it with the following command:

sudo systemctl restart ntpd.service

- To verify the synchronization status, run the following command:

ntpstat

NOTE: Ensure that the synchronization status is displayed as "synchronized". If it is not synchronized, restart the ntpd service, wait for some time, and check the synchronization again using the ntpstat command.
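The "restart ntpd and wait for some time" instruction can be sketched as a polling loop. The helper below is a minimal illustration, not part of the documented procedure: the function name, the retry count, and the delay are assumptions. It relies on `ntpstat` exiting with status 0 when the clock is synchronized and a non-zero status otherwise.

```shell
# Sketch: poll ntpstat until the clock reports as synchronised.
# ntpstat exits with status 0 when synchronised and non-zero otherwise.
# The retry count and delay are illustrative defaults, not documented values.

wait_for_ntp_sync() {
    attempts="${1:-10}"   # how many times to poll
    delay="${2:-30}"      # seconds to wait between polls
    i=1
    while [ "$i" -le "$attempts" ]; do
        if ntpstat >/dev/null 2>&1; then
            echo "NTP synchronised after $i check(s)"
            return 0
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    echo "NTP still not synchronised after $attempts checks" >&2
    return 1
}
```

A typical use would be `sudo systemctl restart ntpd.service && wait_for_ntp_sync`, which restarts the service and then waits up to about five minutes with the defaults above.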

Powering up the Overcloud

- Power on all the controllers.

Powering up the Overcloud for HCI-based deployment

Powering up the Overcloud controllers

- Log in to the Overcloud controllers using the iLO IP address and manually power on all the controllers.

- Log in to the Undercloud VM with the username "stack". To verify that the status of the Overcloud controllers has changed to "power on", run the following commands:

source stackrc
openstack baremetal node list

NOTE: Wait for the controller nodes to power up and for the OS login console to become visible in iLO.

- To verify Overcloud controller connectivity from the Undercloud VM, SSH to each of the VIM controllers:

source stackrc
nova list
ssh heat-admin@<overcloud_controller_ip>

Powering up the cluster service on controller

- Log in to the Overcloud controller as the "heat-admin" user, using the VIP address given in the "OS_AUTH_URL" field of the overcloudrc file in the Undercloud VM:

source stackrc
ssh heat-admin@<VIP IP address>

- To verify the status of the cluster service on the VIM controller, run the following command:

sudo pcs cluster status --all

- If the cluster service is not running, start it on the controller with the following command:

sudo pcs cluster start --all

Powering up the HCI nodes

To power up the HCI nodes, do the following:

- Using the iLO IP address, log in to the HCI nodes and manually power on all the HCI nodes.

- To verify that the status of the HCI nodes changes to "power on", run the following commands from the Undercloud VM:

source stackrc
openstack baremetal node list

NOTE: Wait for the HCI nodes to power up and for the OS login console to become visible in iLO.

- To verify HCI node connectivity from the Undercloud VM, SSH to each of the HCI nodes:

source stackrc
nova list
ssh heat-admin@<hci_ip>
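Rather than reading the node table by eye, the power-state check can be sketched with the OpenStack client's value formatter. The helper name below is hypothetical. It assumes that `stackrc` has already been sourced, that the table exposes the `Name` and `Power State` columns, and that node names contain no spaces.

```shell
# Sketch: print the bare-metal nodes whose power state is not yet "power on".
# Assumes stackrc has already been sourced on the Undercloud VM and that node
# names contain no spaces (the value formatter separates columns with spaces).

nodes_not_powered_on() {
    openstack baremetal node list -f value -c Name -c "Power State" |
        awk '{
            name = $1          # first column is the node name
            $1 = ""            # drop it so the rest of the line is the power state
            sub(/^ +/, "")
            if ($0 != "power on") print name
        }'
}
```

An empty result means every node reports "power on"; any names printed are nodes that are still off or in an unknown state.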

Enabling fencing and resuming Ceph background operations

- Log in to the Overcloud controller as the "heat-admin" user, using the VIP address given in the "OS_AUTH_URL" field of the overcloudrc file in the Undercloud VM.

NOTE: Run the fencing step that follows only if fencing is enabled for HCI. If it is not, skip that step and proceed to resuming the Ceph backend operations.

- If fencing is enabled as part of the Overcloud deployment, enable it using the following commands:

ssh heat-admin@<VIP IP address>
sudo pcs property set stonith-enabled=true

- To resume the Ceph backend operations, run the following commands one after the other from the controller:

ssh heat-admin@<VIP IP address>
sudo ceph osd unset noout
sudo ceph osd unset norecover
sudo ceph osd unset norebalance
sudo ceph osd unset nobackfill
sudo ceph osd unset nodown
sudo ceph osd unset pause
exit
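The six `ceph osd unset` commands can also be expressed as a loop, which keeps the flag order in one place. This is a minimal sketch to run on the Overcloud controller; the function name is hypothetical, and stopping at the first failure is a design choice here rather than something the procedure prescribes.

```shell
# Sketch: clear the Ceph OSD flags listed in the step above, in the same order.
# Intended to run on an Overcloud controller; stops at the first flag that
# fails to clear so the problem can be investigated before continuing.

resume_ceph_operations() {
    for flag in noout norecover norebalance nobackfill nodown pause; do
        sudo ceph osd unset "$flag" || {
            echo "failed to unset $flag" >&2
            return 1
        }
    done
}
```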

Powering up the Overcloud for Ceph-based deployment

Powering up the Overcloud controllers

- Log in to the Overcloud controllers using the iLO IP address and manually power on all the controllers.

- Log in to the Undercloud VM with the username "stack". To verify that the status of the Overcloud controllers has changed to "power on", run the following commands:

source stackrc
openstack baremetal node list

NOTE: Wait for the controller nodes to power up and for the OS login console to become visible in iLO.

- To verify Overcloud controller connectivity from the Undercloud VM, SSH to each of the VIM controllers:

source stackrc
nova list
ssh heat-admin@<overcloud_controller_ip>

Powering up the cluster service on controller

- Log in to the Overcloud controller as the "heat-admin" user, using the VIP address given in the "OS_AUTH_URL" field of the overcloudrc file in the Undercloud VM:

source stackrc
ssh heat-admin@<VIP IP address>

- To verify the status of the cluster service on any VIM controller, run the following command:

sudo pcs cluster status --all

- If the cluster service is not running, start it on the controller with the following command:

sudo pcs cluster start --all

Powering up the Ceph nodes

To power up the Ceph nodes, do the following:

- Using the iLO IP address, log in to the Ceph nodes and manually power on all the Ceph nodes.

- To verify that the status of the Ceph nodes changes to "power on", run the following commands from the Undercloud VM:

source stackrc
openstack baremetal node list

NOTE: Wait for the Ceph nodes to power up and for the OS login console to become visible in iLO.

- To verify Ceph node connectivity from the Undercloud VM, SSH to each of the Ceph nodes:

source stackrc
nova list
ssh heat-admin@<ceph_ip>
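When there are many nodes, the per-node SSH check can be sketched as a loop that reports which hosts answer. The helper name is hypothetical, and the sketch assumes key-based SSH access from the Undercloud VM to the `heat-admin` user, since `BatchMode=yes` makes `ssh` fail instead of prompting for a password.

```shell
# Sketch: try a non-interactive SSH login to each host and report the result.
# Assumes key-based access for the given user; BatchMode=yes makes ssh fail
# rather than prompt, and ConnectTimeout bounds the wait for nodes still booting.

check_ssh() {
    user="$1"
    shift
    failed=0
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=10 "$user@$host" true; then
            echo "$host: reachable"
        else
            echo "$host: NOT reachable"
            failed=1
        fi
    done
    return "$failed"
}
```

For example, `check_ssh heat-admin <ceph_ip_1> <ceph_ip_2>` prints one line per node and returns a non-zero status if any node could not be reached.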

Powering up the Compute nodes

To power up the Compute nodes, do the following:

- Using the iLO IP address, log in to the Compute nodes and manually power on all the Compute nodes.

- To verify that the status of the Compute nodes changes to "power on", run the following commands from the Undercloud VM:

source stackrc
openstack baremetal node list

NOTE: Wait for the Compute nodes to power up and for the OS login console to become visible in iLO.

- To verify Compute node connectivity from the Undercloud VM, SSH to each of the Compute nodes:

source stackrc
nova list
ssh heat-admin@<compute_ip>
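The IP addresses used in the SSH step come from the Networks column of `nova list`. As a rough sketch, they can be pulled out of the table with `grep`. The helper name is hypothetical, and the `ctlplane=` prefix is an assumption: it is the usual name of the provisioning network in director-based deployments, but confirm it against your own `nova list` output.

```shell
# Sketch: extract node IP addresses from the Networks column of `nova list`.
# Assumes stackrc has been sourced and that addresses appear as
# "ctlplane=<ip>"; the network name is an assumption, check your own output.

ctlplane_ips() {
    nova list | grep -o 'ctlplane=[0-9.]*' | cut -d= -f2
}
```

The output is one address per line, so it can feed a loop such as `for ip in $(ctlplane_ips); do ssh heat-admin@"$ip" hostname; done`.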

Enabling fencing and resuming Ceph background operations

- Log in to the Overcloud controller using the VIP address and the user "heat-admin". To enable fencing, run the following commands:

NOTE: The VIP address is given in the OS_AUTH_URL field of the overcloudrc file available in the Undercloud VM.

ssh heat-admin@<VIP IP address>
sudo pcs property set stonith-enabled=true

- To verify that the fencing property is enabled, run the following command:

sudo pcs property show stonith-enabled

The property "stonith-enabled" must be set to true.

- To resume the Ceph backend operations, run the following commands one after the other from the controller:

sudo ceph osd unset noout
sudo ceph osd unset norecover
sudo ceph osd unset norebalance
sudo ceph osd unset nobackfill
sudo ceph osd unset nodown
sudo ceph osd unset pause
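The fencing verification can be turned into a yes/no check that a script can act on. The sketch below uses a hypothetical function name and assumes that `pcs property show stonith-enabled` prints the property on its own line as `stonith-enabled: true`. If your pcs version formats the output differently, adjust the pattern.

```shell
# Sketch: succeed only if pcs reports stonith-enabled as true.
# Assumes the property is printed on its own line as "stonith-enabled: true";
# the exact output format is an assumption, so verify it on your controller.

fencing_enabled() {
    sudo pcs property show stonith-enabled |
        grep -Eq '^[[:space:]]*stonith-enabled:[[:space:]]*true[[:space:]]*$'
}
```

For example, `fencing_enabled && echo "fencing is on"` prints the message only when the property is set to true.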

Starting VMs in the Overcloud [Applicable for both HCI and Ceph-based Overcloud deployments]

- To start the VMs running in the Overcloud, run the following commands:

NOTE: For RHOCP deployment on RHOSP, do not start the RHOCP master and worker VMs. Start any other VMs apart from the master and worker nodes.

source overcloudrc
nova list
nova start <VM_UUID>

Where <VM_UUID> is the list of UUIDs (separated by a blank space) of all the VMs.

- To verify that all the VMs are running in the Overcloud controller, run the following command:

nova list

- If any OpenStack operation fails, check the services and restart the docker services as follows:

- Log in to the Overcloud controller.

- To verify whether a service, for example the Keystone service, is running, run the following command:

[heat-admin@overcloud-controller-0 ~]$ sudo docker ps --filter "name=<service name>"

For example:

[heat-admin@overcloud-controller-0 ~]$ sudo docker ps --filter "name=keystone"

The status must display as: Healthy

- If the status from the preceding command is unhealthy, run the following command:

sudo docker restart <service_name>

Recheck the status, which must display as: Healthy
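The health check can be narrowed to just the containers that need attention by formatting the `docker ps` output and filtering on the status text. This is a minimal sketch with a hypothetical function name. It assumes the containers define a health check, so that their status ends in `(healthy)` or `(unhealthy)`.

```shell
# Sketch: list containers matching a name filter whose status is "(unhealthy)".
# Assumes the containers define a health check, so that `docker ps` appends
# "(healthy)" or "(unhealthy)" to the Status column.

unhealthy_containers() {
    sudo docker ps --filter "name=$1" --format '{{.Names}} {{.Status}}' |
        awk '/\(unhealthy\)/ { print $1 }'
}
```

Each name it prints can then be passed to `sudo docker restart`, for example `for c in $(unhealthy_containers keystone); do sudo docker restart "$c"; done`.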